"And that's the way it is..." For Now...

Using Network Analysis, Event Extraction, and Named Entity Recognition to Track Dynamic News Narratives in Written Media Coverage

Events are not static

In the modern information age, written news reaches readers at a rate never before seen. Today, the public can go online while a newsworthy event is still unfolding and read high-quality, accurate coverage. However, as events evolve, so do the narratives used to convey them. New people and locations appear in follow-up stories while initial players fade from the coverage, and a story first reported as one thing can become something else entirely by the time coverage ends.

Early Coronavirus Reports in the UK...

This Guardian front page from Jan 27, 2020 reports on what would become the global coronavirus pandemic


Less than Two Months Later

Front-page articles from UK news sources on March 17, 2020 announce shutdowns to stop the spread of the virus.


Keeping up with the constant evolution of current events or trying to piece together past events can be confusing

Machine learning can help find narrative chains and determine how stories change over time!

Business Use Cases

Media & Geo-Political Monitoring

Track how key stories about businesses, people, or other entities important to strategy or national security change over time

Event Response Analysis

Analyze the evolution of a disaster and assess where relief efforts were adequate and where relief operations could be improved

OSINT/Disinformation Detection

Compare a story’s various narratives and threads to pinpoint where misinformation entered a storyline

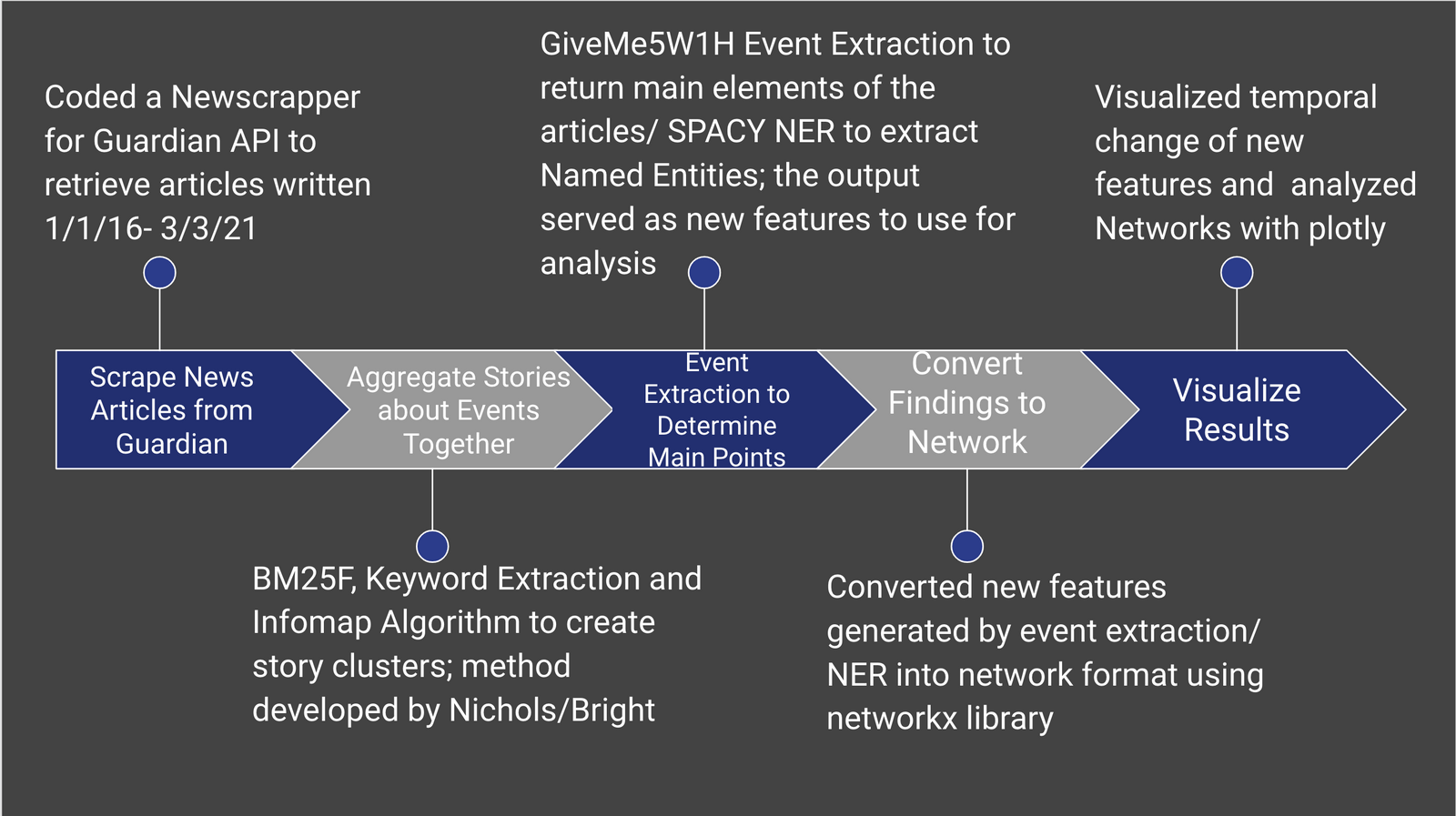

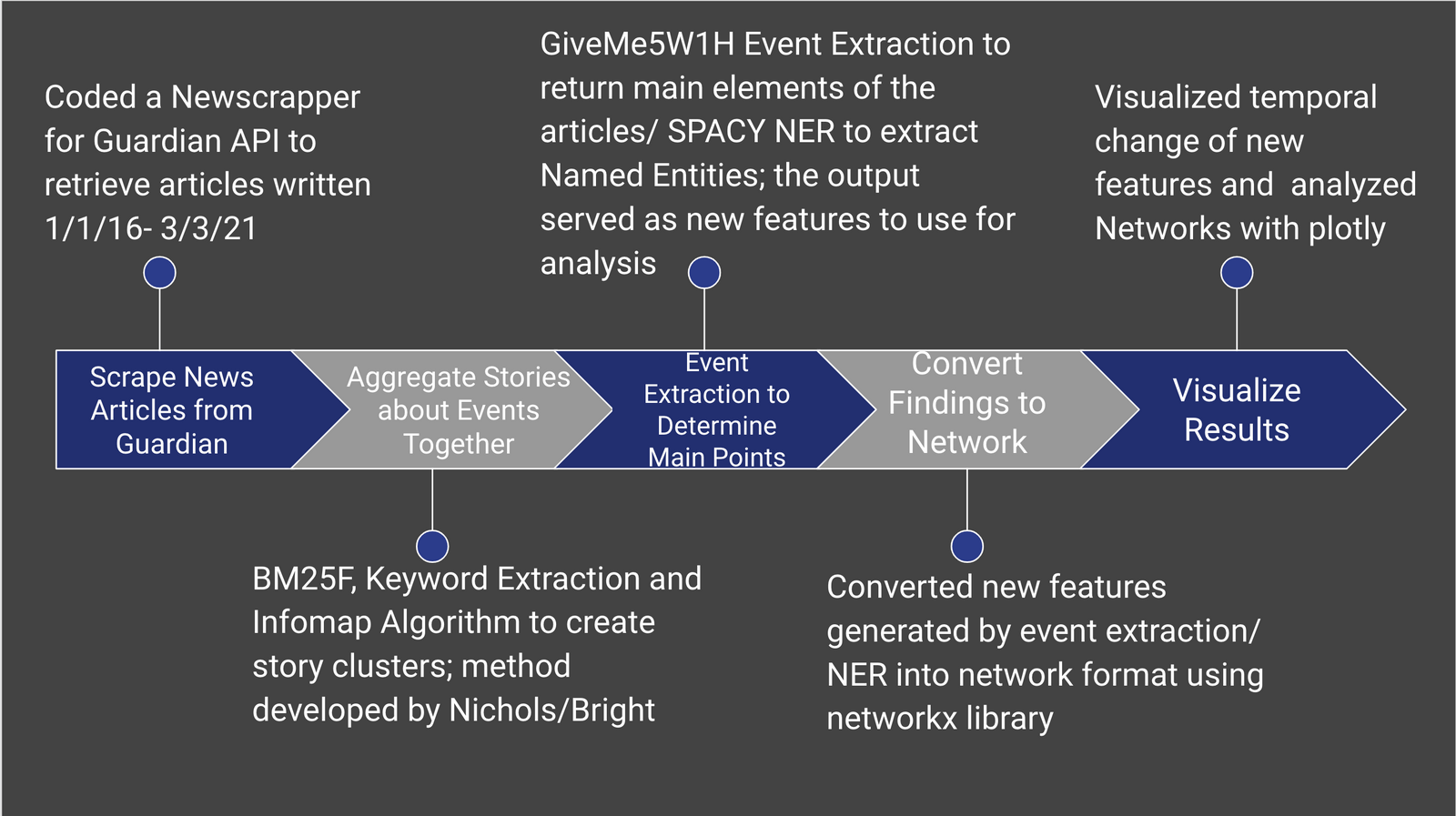

Methodology

This project had the following main steps:

1. Scrape real news articles from the data source (Guardian API, 1/1/16-3/32021; sections: Politics, US News, UK News, Global Development, World News)

2. Aggregate the articles into clusters (using a combination of keyword-importance measures, BM25F, and the Infomap graph clustering algorithm)

3. Perform Event Extraction (GiveMe5W1H)

4. Perform Named Entity Recognition (spaCy's NER pipeline, applied to people, geopolitical entities, organizations, and locations)

5. Convert the features returned by the extraction techniques into networks

6. Graph those networks to find how the important elements of stories change over time
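The clustering step (step 2) can be sketched roughly as follows. This is a minimal, self-contained illustration under stated assumptions, not the project's code. It works in four stages:

- It computes a simplified BM25F score over two hypothetical fields, `title` and `body`. The field weights, `b` values, and `k1` are assumed.
- It uses each article's title as a query against every other article.
- It links any pair whose score reaches a hypothetical `threshold`.
- It returns the connected components of the resulting graph.

Connected components is a deliberately simple stand-in for Infomap. Infomap instead detects communities by minimizing the description length of a random walk on the graph (the map equation), and usually yields finer-grained clusters.

```python
import math
import re
from collections import Counter

# Hypothetical BM25F settings (assumptions, not the project's values):
# titles count double, and both fields use the common b = 0.75.
FIELD_WEIGHTS = {"title": 2.0, "body": 1.0}
B = {"title": 0.75, "body": 0.75}
K1 = 1.2


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25f_scorer(docs, weights, b, k1=K1):
    """Return score(query_terms, i), a simplified BM25F score for docs[i]."""
    fields = list(weights)
    tfs = [{f: Counter(tokenize(d[f])) for f in fields} for d in docs]
    n = len(docs)
    avg_len = {}
    for f in fields:
        field_total = sum(sum(t[f].values()) for t in tfs)
        avg_len[f] = field_total / n if field_total else 1.0
    # Document frequency: number of docs containing the term in any field
    df = Counter()
    for t in tfs:
        df.update(set().union(*(t[f].keys() for f in fields)))

    def idf(term):
        # BM25 idf with +1 inside the log so it never goes negative
        return math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))

    def score(query_terms, i):
        total = 0.0
        for term in set(query_terms):
            # BM25F: combine weighted, length-normalised field frequencies,
            # then apply the k1 saturation once to the combined value
            pseudo_tf = 0.0
            for f in fields:
                length = sum(tfs[i][f].values())
                norm = 1 - b[f] + b[f] * length / avg_len[f]
                pseudo_tf += weights[f] * tfs[i][f][term] / norm
            if pseudo_tf > 0:
                total += idf(term) * pseudo_tf / (k1 + pseudo_tf)
        return total

    return score


def cluster_articles(docs, threshold):
    """Link articles whose title-as-query score reaches threshold, then
    return connected components (a simple substitute for Infomap)."""
    score = bm25f_scorer(docs, FIELD_WEIGHTS, B)
    parent = list(range(len(docs)))

    def find(x):
        # Union-find lookup with path halving
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, doc in enumerate(docs):
        query = tokenize(doc["title"])
        for j in range(len(docs)):
            if i != j and score(query, j) >= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(docs)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


if __name__ == "__main__":
    # Hypothetical toy articles
    docs = [
        {"title": "Wuhan coronavirus outbreak", "body": "China confirms new coronavirus cases"},
        {"title": "UK coronavirus shutdowns", "body": "Schools close as coronavirus cases rise"},
        {"title": "Brexit trade talks resume", "body": "Negotiators meet over trade deal"},
    ]
    print(cluster_articles(docs, threshold=0.1))  # → [[0, 1], [2]]
```

In the toy example, the two coronavirus articles share the term "coronavirus" and end up linked. The Brexit article shares no terms with either of them and stays in a cluster of its own. On real Guardian data, the threshold and field weights would need tuning, and stopword handling would matter much more than it does here.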

Pipeline Elements

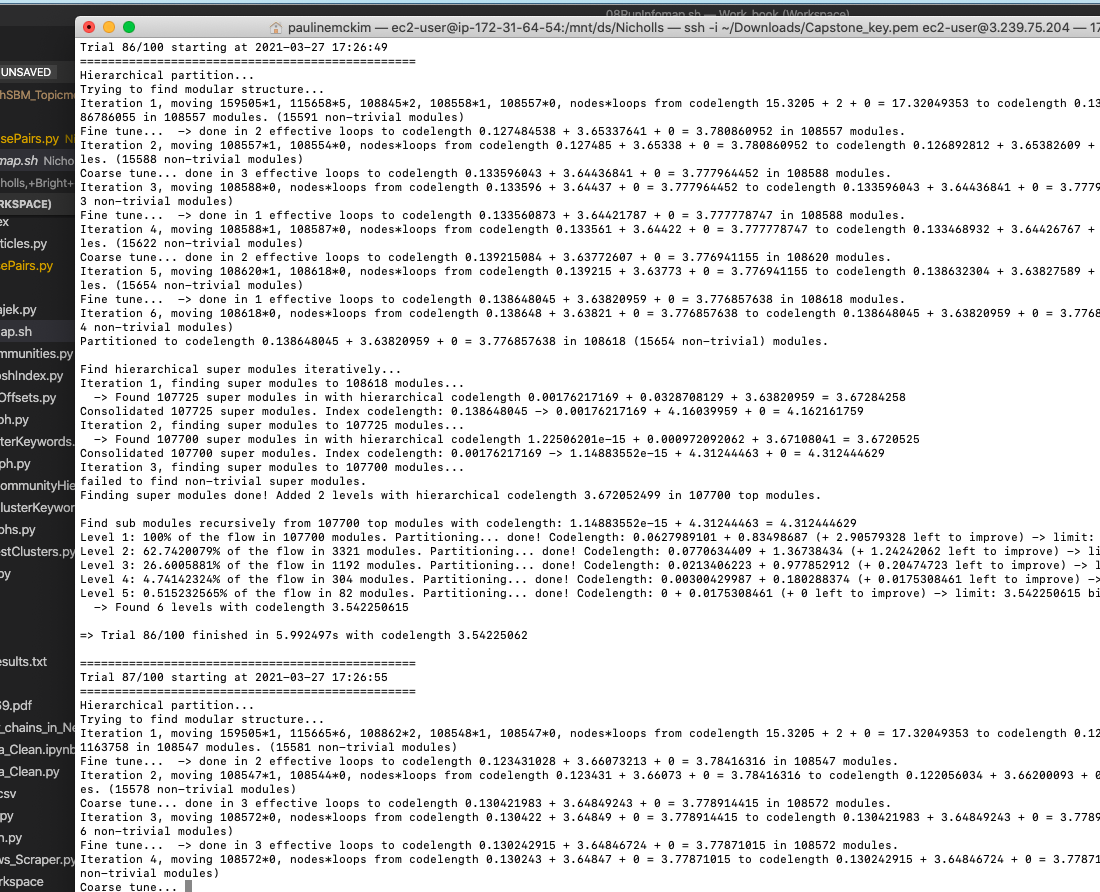

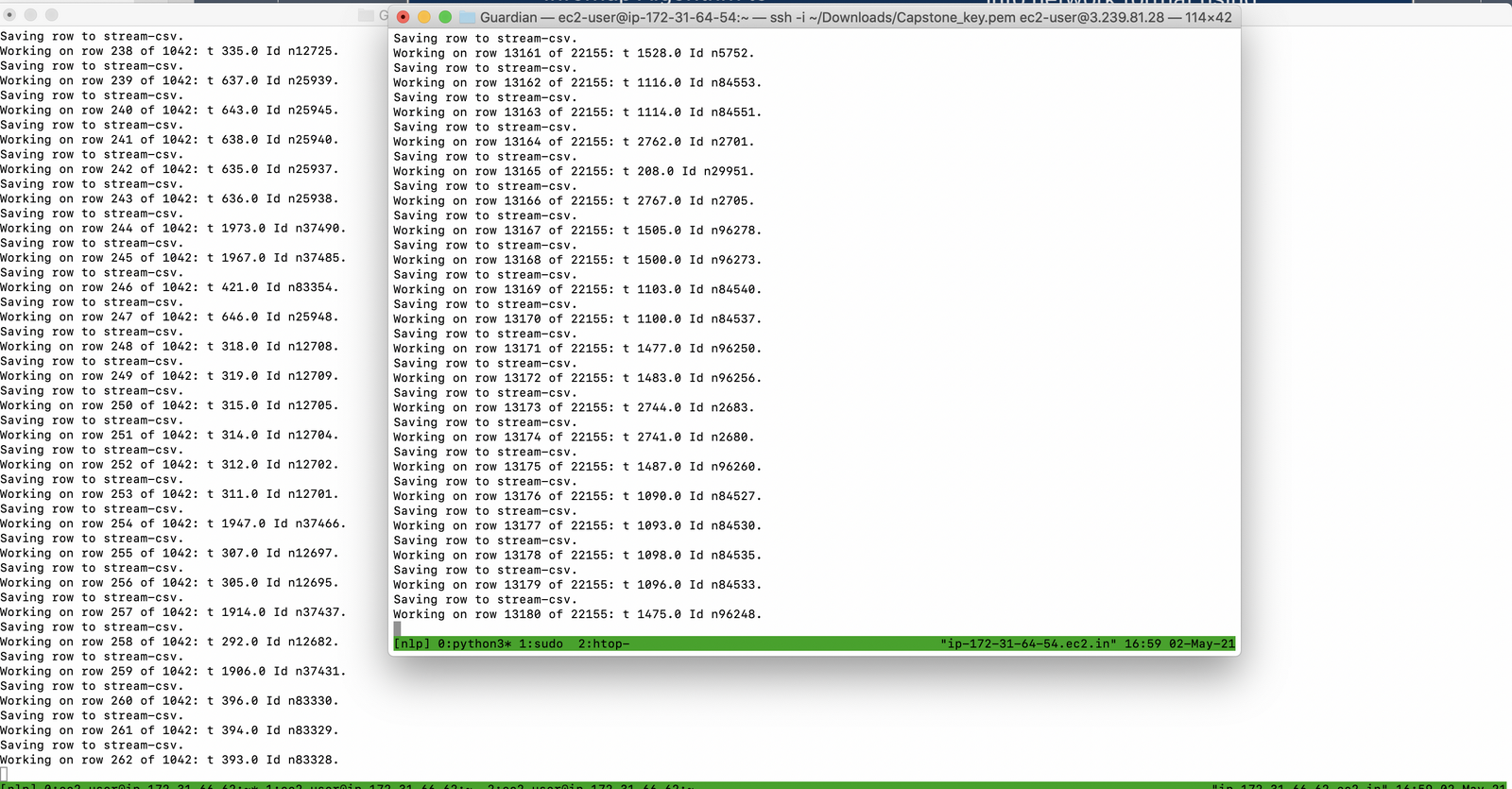

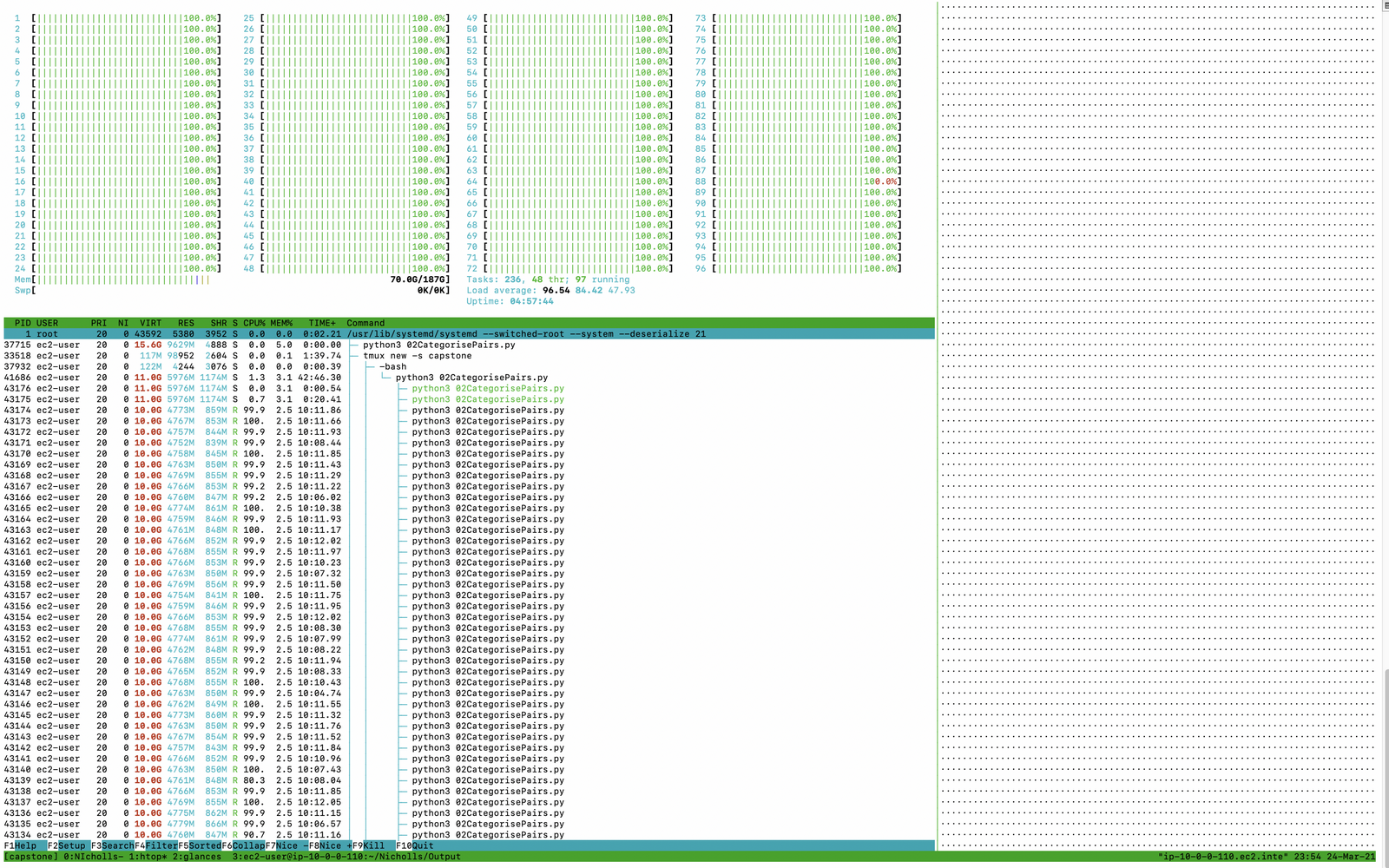
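Steps 4 through 6 of the methodology turn extracted entities into networks that can be compared over time. The sketch below shows one hedged way to do this; it is not the project's implementation. It makes the following assumptions:

- Each article has already been reduced to a date and a list of entity names. In practice these would come from spaCy's `doc.ents`, filtered to the person, geopolitical-entity, organization, and location labels.
- Entities are connected through a weighted co-occurrence graph, built separately for each month.
- Weighted degree serves as the importance measure.

With these in place, the sketch reports which entities entered or left the most-connected set between consecutive months. The article data and the `top_n` cutoff are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence_networks(articles):
    """Build one weighted entity co-occurrence graph per month.

    articles: iterable of (ISO date string, list of entity names).
    With spaCy, a list like this could come from
    [ent.text for ent in nlp(text).ents
     if ent.label_ in {"PERSON", "GPE", "ORG", "LOC"}];
    here the names are supplied directly so no model is needed.
    Returns {"YYYY-MM": Counter({(entity_a, entity_b): weight})}.
    """
    graphs = defaultdict(Counter)
    for date, entities in articles:
        month = date[:7]
        # Every pair of distinct entities in the same article gets an edge
        for a, b in combinations(sorted(set(entities)), 2):
            graphs[month][(a, b)] += 1
    return dict(graphs)


def weighted_degree(edges):
    """Sum of edge weights touching each entity (a simple importance score)."""
    strength = Counter()
    for (a, b), weight in edges.items():
        strength[a] += weight
        strength[b] += weight
    return strength


def narrative_shift(graphs, top_n=2):
    """Compare the top_n most connected entities in consecutive months.

    Returns a list of (previous month, current month,
    entities that entered, entities that left).
    """
    months = sorted(graphs)
    shifts = []
    for prev, curr in zip(months, months[1:]):
        before = {e for e, _ in weighted_degree(graphs[prev]).most_common(top_n)}
        after = {e for e, _ in weighted_degree(graphs[curr]).most_common(top_n)}
        shifts.append((prev, curr, sorted(after - before), sorted(before - after)))
    return shifts


if __name__ == "__main__":
    # Hypothetical entity lists, loosely modelled on the coverage described above
    articles = [
        ("2020-01-27", ["Wuhan", "China", "WHO"]),
        ("2020-01-29", ["Wuhan", "China", "Public Health England"]),
        ("2020-03-17", ["UK", "Boris Johnson", "NHS"]),
        ("2020-03-17", ["UK", "NHS", "WHO"]),
    ]
    print(narrative_shift(cooccurrence_networks(articles)))
    # → [('2020-01', '2020-03', ['NHS', 'UK'], ['China', 'Wuhan'])]
```

Weighted degree was chosen here only because it needs no graph library. A fuller analysis could load the same edge lists into a graph package such as NetworkX and compare other centrality measures or entire community structures across time windows.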

AWS Components

AWS EC2 instances were used to run the clustering-algorithm scripts and the event extraction components in order to speed up processing; tmux (terminal multiplexer) provided persistent sessions, so long-running jobs could keep processing data asynchronously after the user disconnected